What Is Sputtering Equipment?

Sputtering equipment is a device that uses sputtering to deposit a very thin, uniform film on the surface of an object.

Sputtering is a physical vapor deposition (PVD) method, as are vacuum evaporation and ion plating. It is used in many fields, including thin-film deposition for semiconductors and liquid crystal displays, and it is also used to clean the surfaces of objects.

Uses of Sputtering Equipment

Sputtering equipment is used to fabricate thin films for semiconductor devices, liquid crystal displays, and plasma displays. Compared with evaporation-based PVD methods, sputtering can deposit metals and alloys with high melting points, which gives it a wide range of applications.

Recently, metals and other conductive materials have been deposited on plastic, glass, and polymer films to make them conductive, for use as transparent electrodes and wiring in touch panels. This further expands the range of applications for sputtering equipment.

Sputtering is also used to coat medical devices and everyday goods with photocatalytic titanium dioxide to give their surfaces antibacterial properties. In analytical work, it is used for sample preparation for scanning electron microscopy (SEM), for example to coat non-conductive specimens with a thin conductive film.

Structure of Sputtering Equipment

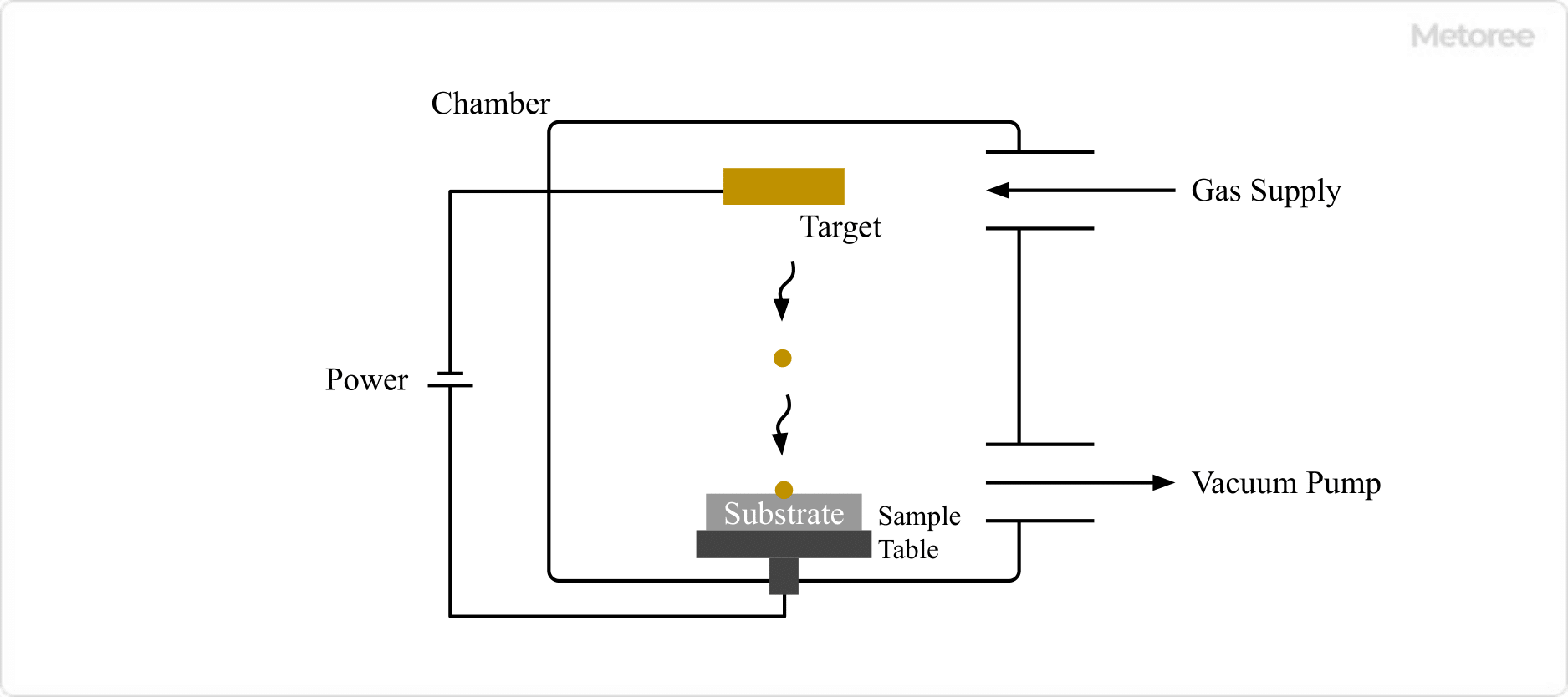

Sputtering equipment mainly consists of the following components:

- Vacuum chamber

- Sample stand

- Sputtering target

- Exhaust system (rotary pump, etc.)

- Gas supply system

- Power supply (high-frequency power supply, high-voltage power supply, etc.)

The vacuum chamber contains a sample stand that holds the substrate and a sputtering target that supplies the sputtering material, while the vacuum pump and gas supply system are connected to the chamber.

Principle of Sputtering Equipment

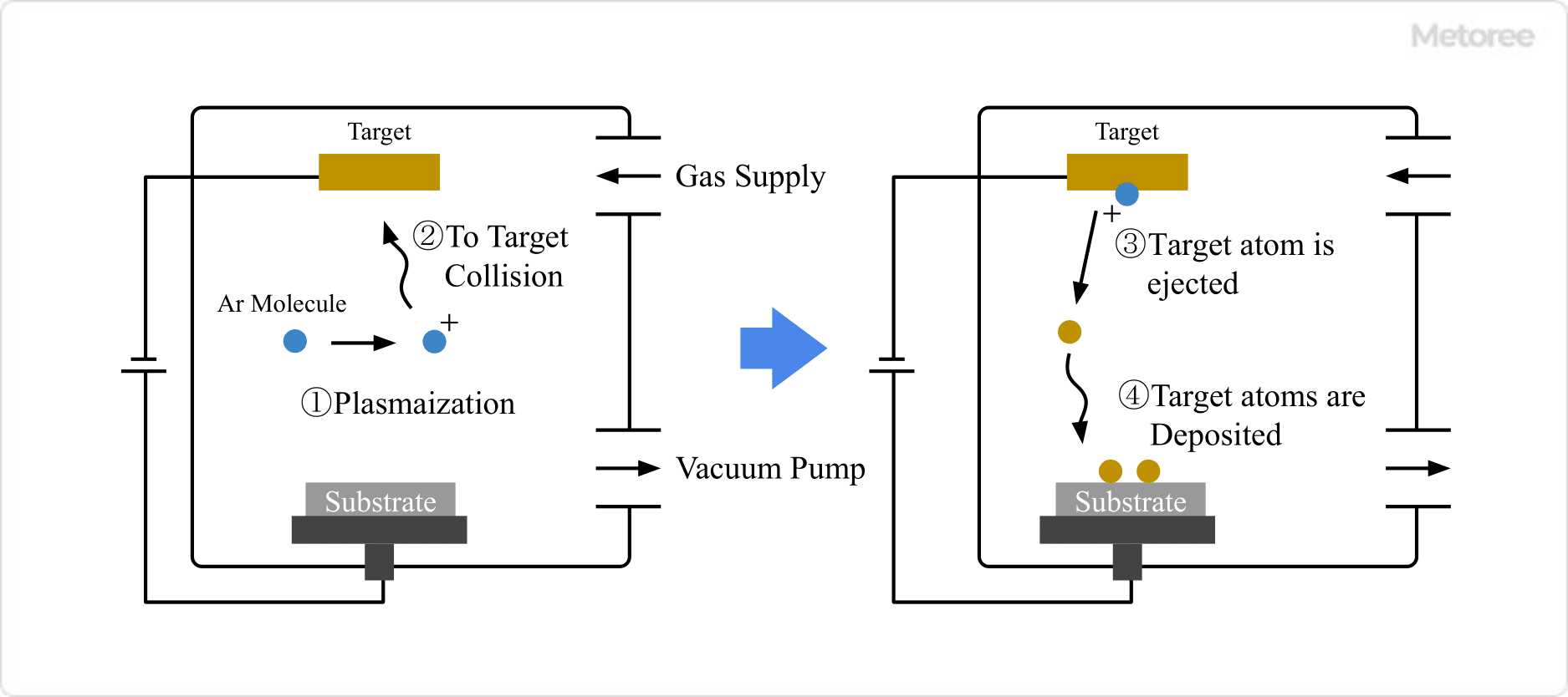

Sputtering equipment deposits a film by applying a high voltage under vacuum so that ions bombard the film material (the target) and knock its atoms out onto the surface of the object. First, the chamber is evacuated to a sufficiently low pressure by the pump, and then an inert gas such as argon is introduced and held at a constant pressure.

When a high negative voltage is applied to the target (the source material for the thin film, which acts as the cathode), a glow discharge is generated and the argon is ionized into a plasma. The positively charged argon ions are accelerated toward the target and strike it, ejecting atoms and molecules (along with some ions) from the target surface. The ejected target atoms then travel to the substrate, which is placed on the anode (positive) side, and deposit on its surface to form a thin film.
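The ejection step above can be quantified with the sputter yield, the average number of target atoms ejected per incident ion. A minimal sketch, assuming singly charged argon ions and a known ion current to the target: the ion arrival rate is the current divided by the elementary charge, and the ejection rate is that arrival rate times the yield. The yield of 2 atoms per ion and the 0.5 A current in the example are hypothetical values chosen for illustration; real yields depend on the target material, ion energy, and angle of incidence. The sketch also ignores secondary electron emission, which makes the measured target current somewhat larger than the ion current alone.

```python
# Minimal sketch: estimate how many target atoms are ejected per second.
# Assumptions (illustrative only, not measured values):
#   - every ion reaching the target is singly charged (Ar+),
#   - the ion current to the target is known (secondary electrons ignored),
#   - the sputter yield (atoms ejected per incident ion) is a constant.

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs


def ions_per_second(ion_current_a: float) -> float:
    """Number of singly charged ions striking the target per second."""
    return ion_current_a / ELEMENTARY_CHARGE


def ejected_atoms_per_second(ion_current_a: float, sputter_yield: float) -> float:
    """Target atoms ejected per second = sputter yield x ion arrival rate."""
    if ion_current_a < 0 or sputter_yield < 0:
        raise ValueError("current and yield must be non-negative")
    return sputter_yield * ions_per_second(ion_current_a)


if __name__ == "__main__":
    # Hypothetical operating point: 0.5 A ion current, assumed yield of 2 atoms/ion.
    rate = ejected_atoms_per_second(0.5, 2.0)
    print(f"{rate:.3e} atoms/s")
```

Doubling either the ion current or the yield doubles the ejection rate in this model, which is why deposition rate is usually controlled through the discharge power.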

Types of Sputtering Equipment

There are various types of sputtering methods.

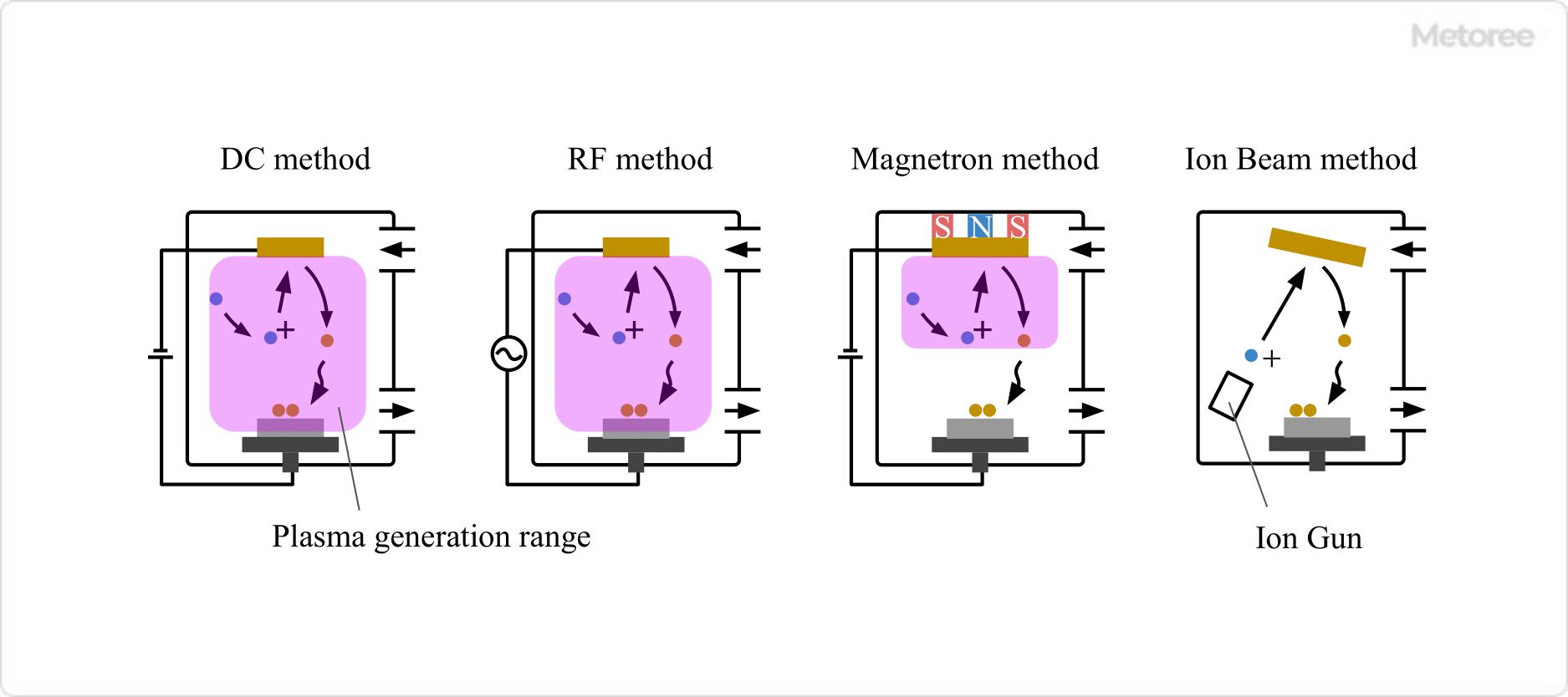

1. DC Method

This method applies a DC voltage between the electrodes. Its main advantage is a simple structure. Its disadvantages are that the sample may be damaged by the plasma, and that a film cannot be formed properly when the sputtering target is an insulator, because positive charge from the incoming ions accumulates on the target surface.

2. RF Method

This method applies a high-frequency AC voltage between the electrodes, which makes it possible to sputter insulating targets such as ceramics, silica and other oxides, and nitrides. Films of these materials cannot be formed by the DC method.
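The difference between the DC and RF methods for insulating targets can be illustrated with a simplified model in which the insulating target behaves like a parallel-plate capacitor between its front surface and the metal electrode behind it. Under DC, arriving positive ions charge the front surface until its potential has shifted by roughly the applied bias and bombardment stops. With RF, electrons flow to the target during the positive half-cycle and neutralize that charge, so as long as a half-period is much shorter than the charging time, the surface potential never drifts far. The sketch estimates both times. The target thickness, relative permittivity, bias, and ion current density are hypothetical values; 13.56 MHz is used because it is a frequency commonly used for RF sputtering.

```python
# Simplified capacitor model of an insulating sputtering target.
# All numerical inputs in the example are assumptions for illustration.

VACUUM_PERMITTIVITY = 8.8541878128e-12  # F/m


def charging_time_s(rel_permittivity: float, thickness_m: float,
                    bias_v: float, ion_current_density_a_m2: float) -> float:
    """Time for the ion current to shift the target surface potential by bias_v.

    Capacitance per unit area: C/A = eps0 * eps_r / d.
    Charge per unit area needed for a shift of V: (C/A) * V.
    Dividing by the ion current density J gives t = (C/A) * V / J.
    """
    cap_per_area = VACUUM_PERMITTIVITY * rel_permittivity / thickness_m
    return cap_per_area * bias_v / ion_current_density_a_m2


def rf_half_period_s(frequency_hz: float) -> float:
    """Duration of one half-cycle of the RF voltage."""
    return 1.0 / (2.0 * frequency_hz)


if __name__ == "__main__":
    # Hypothetical target: 3 mm thick oxide with eps_r ~ 9, 500 V bias,
    # ion current density 10 A/m^2 (= 1 mA/cm^2).
    t_charge = charging_time_s(9.0, 3e-3, 500.0, 10.0)
    t_half = rf_half_period_s(13.56e6)
    print(f"DC charging time: {t_charge * 1e6:.2f} us")
    print(f"RF half period:   {t_half * 1e9:.1f} ns")
```

With these assumed numbers the charging time is on the order of a microsecond while the RF half-period is under 40 ns, so in each half-cycle the surface potential shifts by only a few percent of the bias before it is reset.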

3. Magnetron Method

This method uses magnets behind the target to create a magnetic field that confines the plasma near the target. This reduces plasma damage to the sample and increases the plasma density, resulting in faster film formation. DC, AC, and high-frequency (RF) power supplies can all be used. On the other hand, the target erodes unevenly, mainly where the plasma is concentrated, so target utilization tends to be low.
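Why a modest magnetic field confines the plasma can be seen by comparing the gyroradius (Larmor radius) r = m v / (q B) of electrons and argon ions. A minimal sketch, assuming a field of 0.03 T near the target and a particle energy of 10 eV for both species; both values are illustrative assumptions, not specifications of any particular magnetron. The speed is taken from the non-relativistic relation E = (1/2) m v^2 with all motion perpendicular to the field.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19   # C
ELECTRON_MASS = 9.1093837015e-31      # kg
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg
ARGON_ION_MASS = 39.948 * ATOMIC_MASS_UNIT  # Ar+ (missing electron neglected)


def gyroradius_m(mass_kg: float, energy_ev: float, field_t: float,
                 charge_c: float = ELEMENTARY_CHARGE) -> float:
    """Larmor radius r = m v / (q B) for motion perpendicular to B.

    Uses the non-relativistic speed v = sqrt(2 E / m), adequate for eV energies.
    """
    speed = math.sqrt(2.0 * energy_ev * ELEMENTARY_CHARGE / mass_kg)
    return mass_kg * speed / (charge_c * field_t)


if __name__ == "__main__":
    field_t = 0.03    # assumed field near the target (300 gauss)
    energy_ev = 10.0  # assumed energy for both species
    r_e = gyroradius_m(ELECTRON_MASS, energy_ev, field_t)
    r_i = gyroradius_m(ARGON_ION_MASS, energy_ev, field_t)
    print(f"electron: {r_e * 1e3:.2f} mm, Ar+ ion: {r_i * 1e2:.1f} cm")
```

Under these assumptions the electron orbit is well under a millimetre while the argon ion orbit is on the order of 10 cm. The field therefore traps mainly electrons, which keep ionizing argon close to the target, while the ions are drawn to the target by the electric field.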

4. Ion Beam Method

Ions are produced in an ion source separate from the target and the sample, then accelerated toward the target. Because no discharge occurs in the chamber around the sample, effects on the sample are minimized, there is little concern about impurities from the discharge adhering to the film, and the target does not need to be electrically conductive.
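In the ion beam method, the energy with which ions strike the target is set by the accelerating voltage of the separate ion source rather than by a discharge in the chamber. A minimal sketch, assuming an ion that starts at rest in the source: its kinetic energy in eV equals its charge state times the accelerating voltage, and its speed follows from E = (1/2) m v^2. The singly charged argon ion and the 1 kV accelerating voltage in the example are hypothetical values.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19   # C
ATOMIC_MASS_UNIT = 1.66053906660e-27  # kg


def ion_energy_ev(charge_state: int, accel_voltage_v: float) -> float:
    """Kinetic energy (eV) of an ion of charge state z accelerated from rest through V volts."""
    return charge_state * accel_voltage_v


def ion_speed_m_s(mass_u: float, charge_state: int, accel_voltage_v: float) -> float:
    """Non-relativistic speed from E = (1/2) m v^2."""
    energy_j = ion_energy_ev(charge_state, accel_voltage_v) * ELEMENTARY_CHARGE
    mass_kg = mass_u * ATOMIC_MASS_UNIT
    return math.sqrt(2.0 * energy_j / mass_kg)


if __name__ == "__main__":
    # Hypothetical beam: singly charged argon (about 39.948 u), 1 kV acceleration.
    print(ion_energy_ev(1, 1000.0), "eV")
    print(f"{ion_speed_m_s(39.948, 1, 1000.0):.3e} m/s")
```

Because the energy depends only on the source settings, it can be adjusted independently of the gas pressure and geometry around the sample.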

In addition to the above, there are other types of sputtering equipment, such as electron cyclotron resonance (ECR) sputtering. The appropriate type should be selected according to the application and budget.

Other Information on Sputtering Equipment

Features of Sputtering Equipment

Sputtering equipment can produce films of uniform thickness, and because the sputtered atoms arrive at the substrate with relatively high energy, the films adhere strongly. It can also deposit films of high-melting-point metals and alloys, which is difficult with other PVD methods. In addition, oxide films can be deposited by introducing oxygen in place of, or together with, argon or another inert gas.

However, there are some disadvantages: film deposition generally takes longer than with other PVD methods such as vacuum evaporation, and the substrate may be damaged by the generated plasma.
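The points about thickness uniformity and deposition time can be made concrete with two small calculations. A minimal sketch, assuming a constant deposition rate (a hypothetical 10 nm/min in the example) and using one common convention for thickness non-uniformity, (max - min) / (max + min) x 100 %, applied to hypothetical thickness measurements taken across a substrate. Other uniformity definitions are also in use, so the convention should match whatever specification the film must meet.

```python
def deposition_time_s(thickness_nm: float, rate_nm_per_min: float) -> float:
    """Time (s) to grow a film of the given thickness at a constant rate."""
    if rate_nm_per_min <= 0:
        raise ValueError("deposition rate must be positive")
    return thickness_nm / rate_nm_per_min * 60.0


def nonuniformity_percent(thicknesses_nm: list[float]) -> float:
    """Thickness non-uniformity as (max - min) / (max + min) x 100 %."""
    if not thicknesses_nm:
        raise ValueError("need at least one thickness measurement")
    t_max, t_min = max(thicknesses_nm), min(thicknesses_nm)
    return (t_max - t_min) / (t_max + t_min) * 100.0


if __name__ == "__main__":
    # Hypothetical: 200 nm film at an assumed 10 nm/min.
    print(deposition_time_s(200.0, 10.0), "s")
    # Hypothetical thickness readings (nm) at several points on one substrate.
    print(f"{nonuniformity_percent([98.0, 100.0, 101.0, 102.0]):.1f} %")
```

Halving the assumed rate doubles the deposition time, which is the practical cost of the lower rates mentioned above.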

A

A

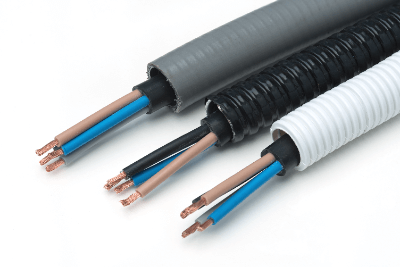

A Cable Rack is a rack for laying cables on.

A Cable Rack is a rack for laying cables on.